Artificial intelligence, or AI, is changing how people make art, write, and communicate. It can write stories, make songs, and create pictures that look like real photos. AI is powerful and can help people do amazing things.

But like any tool, AI can be used in bad ways too. One of the most serious problems is when people use AI to make sexual or adult pictures of real people without asking them first.

Online, this kind of misuse is often linked to something called “Rule 34,” an old internet saying that if something exists, someone has made adult content about it. Applied to AI, it means using these tools to make fake sexual images, videos, or stories that look real.

This article explains what “Rule 34” means, how people use AI to make this type of content, why it’s harmful, and what we can do to stop it while still using AI for good purposes.

What Is “Rule 34”?

“Rule 34” started in the early 2000s on online message boards. It began as a joke about how much adult material exists on the internet. People said, “If it exists, there’s porn of it.”

At first, people made this kind of content by hand. Artists drew it or edited photos and videos. But today, AI can make this material automatically in seconds. That makes the problem much bigger and harder to control.

What started as a silly online joke has turned into a serious problem about privacy, consent, and respect.

How AI Helps Create Rule 34 Content

Modern AI tools are built on computer programs called machine learning models. These models learn from huge amounts of data, such as millions of images, videos, and texts from the internet. Once trained, they can make new pictures, videos, or stories that look like real ones.

AI tools such as image generators or deepfake creators can make realistic people, faces, and even voices. These tools can be used in helpful ways, like for art, movies, or video games. But they can also be used in bad ways—like making fake sexual images of real people.

Here are the main ways AI can be misused:

1. Training Data Problems

AI learns from the data it is given. If that data includes adult or sexual content, the AI can accidentally learn to make similar material.

2. Trick Prompts

Even when companies try to stop AI from making adult images, people can trick the system. They do this by changing their prompts or using secret words so the AI doesn’t recognize the request as adult content.

3. Deepfake Technology

Deepfakes are fake videos or images that use AI to put someone’s face on another person’s body. Some people use this to make fake sexual videos or photos of real people.

4. Text-Based Misuse

AI that writes can also be misused. Some people use writing tools to make fake or sexual stories about real people. Even if it’s not a picture, it can still hurt someone’s reputation and feelings.

How Big Is the Problem?

AI tools are easy to use. Anyone with a computer or phone can create fake images or videos in minutes. No special skills are needed. This has made the problem much larger.

A 2019 report by the company Deeptrace found that about 96% of deepfake videos online were pornographic.

The problem affects everyone: celebrities, teachers, influencers, and even ordinary people who post selfies online. Once your photo is public, someone could use it to make a fake image without you ever knowing.

The Harm It Causes

1. No Consent

The biggest problem is consent. People in these fake images never agreed to be shown this way. It’s an invasion of their privacy and a violation of their rights.

2. Emotional Pain

Even though the images are fake, they still hurt. Victims often feel scared, embarrassed, and angry. They may worry about their family, jobs, or friends seeing the fake images. The stress and shame can last for years.

3. Harassment Against Women

Most of the victims are women and girls. This kind of AI use continues long-standing patterns of harassment and objectification online. Public figures and women who speak out online are often targeted as a form of revenge or bullying.

4. Damage to Reputation

Once a fake image spreads online, it can ruin someone’s reputation—even if they prove it’s not real. Employers, coworkers, and loved ones may still see or believe the fake content.

5. Legal Problems

Many countries don’t yet have clear laws for AI-generated images or videos. Most existing laws were written before this technology existed. That means victims often have few options to fight back.

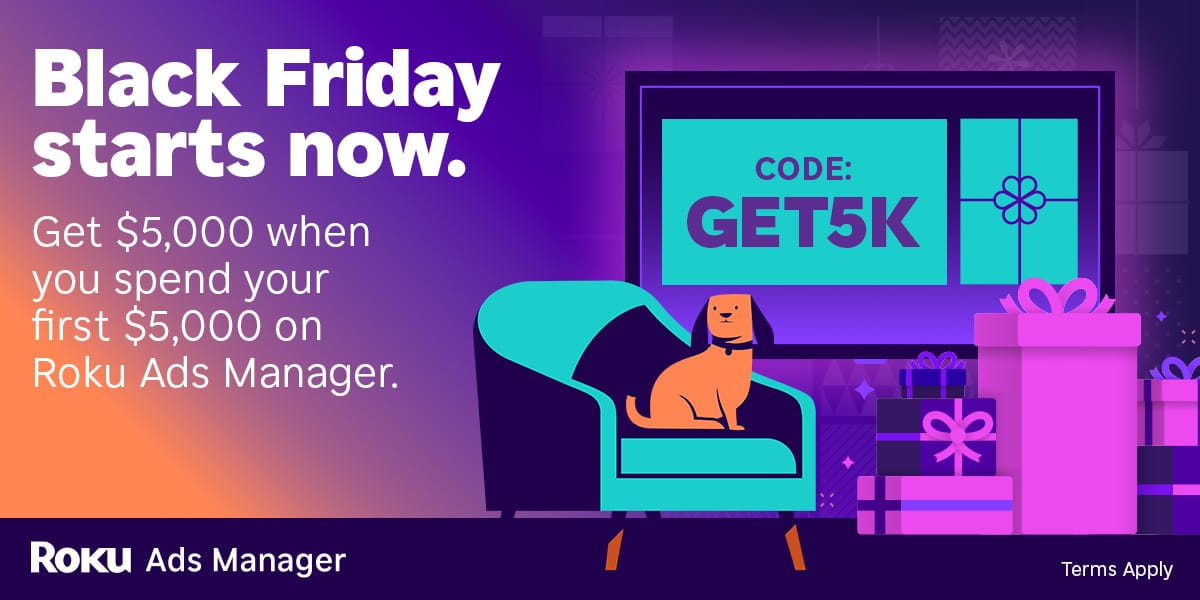

CTV ads made easy: Black Friday edition

As with any digital ad campaign, the important thing is to reach streaming audiences who will convert. Roku’s self-service Ads Manager stands ready with powerful segmentation and targeting — plus creative upscaling tools that transform existing assets into CTV-ready video ads. Bonus: we’re gifting you $5K in ad credits when you spend your first $5K on Roku Ads Manager. Just sign up and use code GET5K. Terms apply.

How Governments and Tech Companies Are Responding

1. Platform Policies

Websites like Reddit, TikTok, and X (formerly Twitter) have banned non-consensual explicit AI content. Some use AI detection tools to spot and delete fake images or videos quickly.

2. Tech Company Action

Researchers and companies are developing watermarking tools that mark AI-created images. These digital marks can help people tell whether something was made by AI, which reduces confusion and misuse.

3. Reporting and Enforcement

Some countries now allow victims to report fake AI images directly to the police or online safety groups. Once reported, these images can be removed and the offenders investigated.

The Role of AI Developers

AI developers play a central role in preventing misuse. Ethical AI design requires foresight, responsibility, and transparency.

Best Practices for Developers

Filtered Datasets: Train models only on non-explicit data collected with consent.

Safety Filters: Implement robust safeguards that block or flag explicit outputs.

Prompt Moderation: Monitor for prohibited prompts that attempt to generate adult material.

Transparency: Label AI-generated images clearly to avoid confusion with real photography.

Reporting Systems: Allow users to report harmful or unethical outputs.

By following these principles, AI developers can reduce harm without stifling innovation.
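To make the prompt-moderation idea a little more concrete, here is a minimal Python sketch of a keyword check that first undoes a few common tricks, such as swapping letters for look-alike digits or spacing a word out. The blocklist, the look-alike map, and the `normalize` and `check_prompt` helpers are hypothetical and only for illustration. As the “Trick Prompts” section points out, people find ways around word lists, so a real system would treat a check like this as one layer alongside trained classifiers and human review.

```python
import re
import unicodedata

# Hypothetical blocklist for illustration only. A real service would use a
# maintained policy list plus trained classifiers, not a handful of words.
BLOCKED_TERMS = {"nude", "nsfw", "explicit"}

# Common look-alike swaps people use to slip words past keyword filters.
LOOKALIKES = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)


def normalize(prompt: str) -> str:
    """Fold Unicode variants (e.g. full-width letters), lowercase, undo look-alike swaps."""
    text = unicodedata.normalize("NFKC", prompt).lower()
    return text.translate(LOOKALIKES)


def check_prompt(prompt: str) -> tuple[bool, list[str]]:
    """Return (allowed, matched_terms) for a text prompt."""
    text = normalize(prompt)
    # Remove every non-letter so spaced-out or punctuated spellings such as
    # "n u d e" or "n.u.d.e" still match. Searching the joined text is
    # deliberately blunt and will over-flag some innocent prompts.
    collapsed = "".join(re.findall(r"[a-z]+", text))
    hits = sorted(term for term in BLOCKED_TERMS if term in collapsed)
    return (not hits, hits)
```

For example, `check_prompt("NUD3 photo")` returns `(False, ["nude"])`, while an ordinary request such as “a watercolor sunset over the sea” passes. Because joining the letters can produce accidental matches, flagged prompts are better routed to review than silently blocked.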

The Responsibility of Users

While developers must build safer tools, users also share responsibility. AI should never be used to humiliate, exploit, or harm others.

Guidelines for Ethical Use

Always get consent before using someone’s image or likeness in generated media.

Avoid sharing or reposting explicit AI-generated material.

Report non-consensual or exploitative content when encountered online.

Support educational campaigns that promote digital respect and empathy.

Responsible AI use begins with human values—respect, consent, and accountability.

The Broader Ethical Debate

AI and Rule 34 raise deeper philosophical questions about technology and morality.

Freedom of Expression vs. Harm: How should societies balance creative freedom with protection from exploitation?

Digital Identity: As AI becomes capable of cloning voices and faces, what does authenticity mean?

Ownership and Consent: Who owns an image generated by AI—especially if it depicts a real person?

Cultural Impact: How do these technologies affect how people view bodies, relationships, and privacy?

Ethicists argue that technology should enhance human dignity, not diminish it. Innovation loses legitimacy when it causes real harm.

Detection and Prevention Technology

Ironically, AI can also be part of the solution. The same machine learning methods that generate harmful content can help detect and remove it.

Detection Techniques

Watermarking: Embedding invisible digital markers in AI-generated images.

Forensic Analysis: Identifying pixel patterns unique to synthetic images.

Reverse Image Search: Finding copies of deepfake content across the web.

AI Classifiers: Training models specifically to recognize explicit synthetic media.

By combining detection with stricter policies, platforms can reduce the spread of non-consensual material significantly.
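As a toy illustration of the watermarking idea, the Python sketch below hides a short tag in the lowest bit of the first few pixel values of a grayscale image and later checks for it. The tag, the flat list-of-pixels format, and the function names are assumptions made for this example. This “least significant bit” scheme is easy to follow but fragile: resizing, re-compressing, or even brightening the image erases it, so it shows the concept rather than how production watermarking tools work.

```python
# Hypothetical tag marking an image as AI-generated: the ASCII bytes "AI" (16 bits).
TAG = b"AI"


def tag_bits(tag: bytes) -> list[int]:
    """Expand bytes into individual bits, most significant bit first."""
    return [(byte >> shift) & 1 for byte in tag for shift in range(7, -1, -1)]


def embed_watermark(pixels: list[int], tag: bytes = TAG) -> list[int]:
    """Return a copy of 8-bit grayscale pixels with the tag hidden in the lowest bits."""
    bits = tag_bits(tag)
    if len(pixels) < len(bits):
        raise ValueError("image too small to hold the watermark")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the least significant bit, then set it to the tag bit.
        # Each pixel changes by at most 1, far too little to notice.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def has_watermark(pixels: list[int], tag: bytes = TAG) -> bool:
    """Check whether the lowest bits of the first pixels spell out the tag."""
    bits = tag_bits(tag)
    if len(pixels) < len(bits):
        return False
    return [p & 1 for p in pixels[: len(bits)]] == bits
```

Each pixel changes by at most 1, so the marked image looks the same. But adding 1 to every pixel flips every lowest bit, and `has_watermark` then returns `False`. That weakness is exactly what more robust watermarking methods are designed to overcome.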

Education and Awareness

Awareness is crucial. Many people still don’t understand how easy it is to create fake explicit content—or how damaging it can be. Schools, media organizations, and governments can help by teaching:

How to recognize AI-generated images.

The importance of consent and digital ethics.

How to report and remove harmful content.

The psychological impact of digital harassment.

Education encourages responsible technology use and fosters empathy toward victims.

A Path Forward

AI will continue to grow more powerful, and so will the potential for misuse. But this doesn’t mean society must accept harmful content as inevitable. The same creativity and intelligence that drive AI innovation can also build safeguards and ethical frameworks.

Key steps forward include:

Stronger Global Laws: Harmonize regulations across countries to make non-consensual AI pornography illegal everywhere.

Ethical AI Research: Encourage developers to integrate moral reasoning and fairness into model design.

Cross-Industry Collaboration: Unite policymakers, tech firms, and civil society to create shared safety standards.

Empowered Users: Give individuals tools to protect their digital identity and request immediate takedowns.

Cultural Change: Promote a digital culture rooted in respect and empathy rather than exploitation.
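Reverse image search and fast takedowns, like those in the “Empowered Users” step, depend on recognizing a known image even after it has been resized, re-encoded, or slightly edited. One simple, well-known way to do this is an “average hash.” The Python sketch below shrinks a grayscale image (given as rows of 0 to 255 values) to an 8×8 grid, records which cells are brighter than average, and compares two fingerprints by counting the bits that differ. The function names and the 10-bit matching threshold are illustrative assumptions, and real matching services use more robust hashes, but the idea is similar: a platform can compare fingerprints of new uploads against fingerprints of reported images.

```python
def average_hash(image: list[list[int]], size: int = 8) -> int:
    """Return a size*size-bit fingerprint of a grayscale image (rows of 0-255 values)."""
    height, width = len(image), len(image[0])
    if height < size or width < size:
        raise ValueError("image must be at least size x size pixels")
    cells = []
    for r in range(size):
        for c in range(size):
            # Average the block of original pixels that shrinks into this cell.
            y0, y1 = r * height // size, (r + 1) * height // size
            x0, x1 = c * width // size, (c + 1) * width // size
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    fingerprint = 0
    for value in cells:
        # One bit per cell: 1 if the cell is brighter than the image average.
        fingerprint = (fingerprint << 1) | (1 if value > mean else 0)
    return fingerprint


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two fingerprints."""
    return bin(a ^ b).count("1")


def likely_same_image(a: list[list[int]], b: list[list[int]], max_distance: int = 10) -> bool:
    """Treat two images as copies if their fingerprints differ in only a few bits.

    The default threshold of 10 bits is an illustrative assumption, not a standard.
    """
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance
```

For instance, a 16×16 left-to-right gradient and a copy brightened by 10 levels produce the same fingerprint, while the same gradient running top to bottom differs in 32 of the 64 bits.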

Conclusion

The connection between artificial intelligence and Rule 34 reveals both the potential and peril of modern technology.

The solution isn’t to fear AI—it’s to guide it with ethics, law, and compassion. Developers must design with safety in mind. Lawmakers must act decisively. Users must choose respect over curiosity.

Have a quick question? Contact me directly and let’s talk through your SEO needs.